SEO pagination issues for ecommerce and how to solve them

Handled incorrectly, pagination can lead to issues with getting your content indexed. Let's take a look at what those issues are, how to avoid them and some suggested best practice.

What is pagination and why is it important?

Pagination is when content has been divided between a series of pages, such as on ecommerce category pages or lists of blog articles.

Pagination is one of the ways in which page equity flows through a website.

It's important for SEO that it is done correctly. This is because the pagination setup will impact how effectively crawlers can crawl and index both the paginated pages themselves and all the links on those pages, like the aforementioned product pages and blog listings.

What are the potential SEO issues with pagination?

I've come across a few blogs which explain that pagination is bad and that we should block Google from crawling and indexing paginated pages, in the name of either avoiding duplicate content or improving crawl budget.

This isn't quite right.

Duplicate content

Duplicate content isn't an issue with pagination, because paginated pages will contain different content to the other pages in the sequence.

For example, page two will list a different set of products or blogs to page one.

If you have some copy on your category page, I'd recommend only having it on the first page and removing it from deeper pages in the sequence. This will help signal to crawlers which page we want to prioritise.

Don't worry about duplicate meta descriptions on paginated pages either: meta descriptions aren't a ranking signal, and Google tends to rewrite them a lot of the time anyway.

Crawl budget

Crawl budget isn't something most sites have to worry about.

Unless your site has millions of pages or is frequently updated, like a news publisher or job listing site, you're unlikely to see serious issues arise relating to crawl budget.

If crawl budget is a concern, then optimising to reduce crawling of paginated URLs could be a consideration, but this will not be the norm.

So, what is the best approach? Generally speaking, it is more valuable to have your paginated content crawled and indexed than not.

This is because if we discourage Google from crawling and indexing paginated URLs, we also discourage it from accessing the links within those paginated URLs.

This can make URLs on those deeper paginated pages, whether those are products or blog articles, harder for crawlers to access and can potentially cause them to be deindexed.

After all, internal linking is a vital component of SEO and essential in allowing users and search engines to find our content.

So, what is the best approach for pagination?

Assuming we want paginated URLs and the content on those pages to be crawled and indexed, there are a few key points to follow:

- Href anchor links should be used to link between multiple pages. Google doesn't scroll or click, which can lead to problems with "load more" functionality or infinite scroll implementations.

- Each page should have a unique URL, such as category/page-2, category/page-3 and so on.

- Each page in the sequence should have a self-referencing canonical. On /category/page-2, the canonical tag should point to /category/page-2.

- All pagination URLs should be indexable. Don't use a noindex tag on them. This ensures that search engines can crawl and index your paginated URLs and, more importantly, makes it easier for them to find the products that sit on those URLs.

- Rel=next/prev markup was used to highlight the relationship between paginated pages, but Google said they stopped supporting this in 2019. If you're already using rel=next/prev markup, leave it in place, but I wouldn't worry about implementing it if it's not present.

- As well as linking to the next couple of pages in the sequence, it's also a good idea to link to the final page in your pagination. This gives Googlebot a direct link to the deepest page in the sequence, reducing click depth and allowing it to be crawled more efficiently. This is the approach taken on the Hallam blog.

- Ensure the default sorting option on a category page of products is by best selling or your preferred priority order. We want to avoid our best-selling products being listed on deep pages, as this can harm their organic performance.
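
To make the points above concrete, here is a minimal Python sketch of how a template helper might build the canonical tag and the pagination links. The function names and the `window` parameter are hypothetical, and the `/category/page-N` URL scheme is simply the one used in the examples above; treat it as an illustration under those assumptions rather than a definitive implementation.

```python
from html import escape


def page_url(base: str, page: int) -> str:
    """Return the unique URL for a page; page 1 lives at the base URL (assumed scheme)."""
    return base if page == 1 else f"{base}/page-{page}"


def pagination_pages(current: int, last: int, window: int = 2) -> list[int]:
    """Pages to link to: first, previous, the next `window` pages, and the last page."""
    wanted = {1, last, current - 1}
    wanted.update(range(current + 1, current + window + 1))
    wanted.discard(current)
    # Drop anything outside the valid range (e.g. page 0 or pages past the end).
    return sorted(p for p in wanted if 1 <= p <= last)


def canonical_tag(base: str, current: int) -> str:
    """Self-referencing canonical: each page points at its own URL."""
    return f'<link rel="canonical" href="{escape(page_url(base, current))}">'


def pagination_links(base: str, current: int, last: int) -> str:
    """Plain <a href> links, so crawlers can follow them without clicking or scrolling."""
    return "\n".join(
        f'<a href="{escape(page_url(base, p))}">{p}</a>'
        for p in pagination_pages(current, last)
    )


print(canonical_tag("/category", 2))
print(pagination_links("/category", 2, 10))
```

Because the last page is always included in the link set, the deepest page in the sequence stays one click away from every other page.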

You may see paginated URLs start to rank in search when ideally you want the main page ranking, as the main page is likely to deliver a better user experience (UX) and contain better content or products.

You can help avoid this by making it very clear which page is the 'priority' page, by 'de-optimising' the paginated pages:

- Only have category page content on the first page in the sequence

- Have meta titles dynamically include the page number at the start of the tag

- Include the page number in the H1
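
As a rough illustration of these three points, the sketch below builds the title, H1 and copy visibility from the page number. The exact title format and the site name "Example Store" are assumptions made for the example, not a recommended standard.

```python
SITE_NAME = "Example Store"  # hypothetical site name, for illustration only


def meta_title(category: str, page: int) -> str:
    """Put the page number at the start of the title on every page after the first."""
    if page == 1:
        return f"{category} | {SITE_NAME}"
    return f"Page {page} | {category} | {SITE_NAME}"


def h1_text(category: str, page: int) -> str:
    """Include the page number in the H1 on deeper pages."""
    return category if page == 1 else f"{category} (Page {page})"


def show_category_copy(page: int) -> bool:
    """Category copy appears on the first page of the sequence only."""
    return page == 1


print(meta_title("Men's Jeans", 3))
print(h1_text("Men's Jeans", 3))
print(show_category_copy(3))
```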

Common pagination mistakes

Don't be caught out by these two common pagination mistakes!

- Canonicalising back to the root page

This is probably the most common one, whereby /page-2 would have a canonical tag back to /page-1. This generally isn't a good idea, as it suggests to Googlebot not to crawl the paginated page (in this case page 2), meaning that we make it harder for Google to crawl all the product URLs listed on that paginated page too.

- Noindexing paginated URLs

Similar to the above point, this leads search engines to ignore any ranking signals from the URLs you've applied a noindex tag to.
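
Both mistakes can be spotted in a page's HTML. The following is a small sketch using only Python's standard library; the function name `audit_paginated_page` is hypothetical, and it assumes the canonical and the page URL are given in the same form (both relative or both absolute), since it compares them as plain strings.

```python
from html.parser import HTMLParser


class HeadSignals(HTMLParser):
    """Collect the canonical URL and any robots noindex directive from a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href")
        elif tag == "meta" and a.get("name", "").lower() == "robots":
            if "noindex" in a.get("content", "").lower():
                self.noindex = True


def audit_paginated_page(html: str, page_url: str) -> list[str]:
    """Return a list of problems found; an empty list means neither mistake was detected."""
    parser = HeadSignals()
    parser.feed(html)
    problems = []
    # Assumption: simple string comparison, ignoring only a trailing slash.
    if parser.canonical is not None and parser.canonical.rstrip("/") != page_url.rstrip("/"):
        problems.append(f"canonical points to {parser.canonical}, not to the page itself")
    if parser.noindex:
        problems.append("page carries a noindex directive")
    return problems


bad = '<head><link rel="canonical" href="/category"><meta name="robots" content="noindex, follow"></head>'
print(audit_paginated_page(bad, "/category/page-2"))
```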

What other pagination options are there?

'Load more'

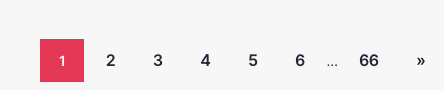

This is when a user reaches the bottom of a category page and clicks to load more products.

There are a few things you need to be careful about here. Google only crawls href links, so as long as clicking the load more button still uses crawlable links and a new URL is loaded, there's no issue.

This is the current setup on Asos. A 'load more' button is used, but hovering over the button we can see it is an href link, a new URL loads and that URL has a self-referencing canonical.

If your 'load more' button only works with JavaScript, with no crawlable links and no new URL for paginated pages, that's potentially risky as Google may not crawl the content hidden behind the load more button.

Infinite scroll

This occurs when users scroll to the bottom of a category page and more products automatically load.

I don't actually think this is great for UX. There is no indication of how many products are left in the series, and users who want to reach the footer can be left frustrated.

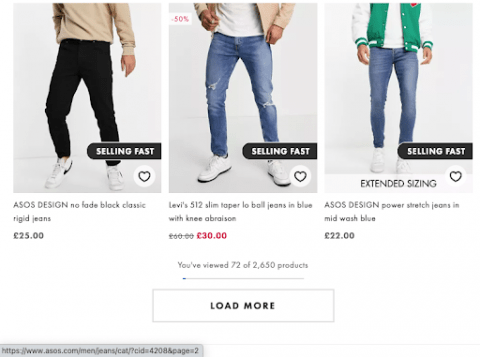

In my quest for a pair of men's jeans, I found this implementation on Asda's jeans range on their George subdomain at https://direct.asda.com/.

If you scroll down any of their category pages, you'll notice that as more products are loaded, the URL does not change.

Instead, it's totally reliant on JavaScript. Without those href links, this is going to make it trickier for Googlebot to crawl all of the products listed deeper than the first page.

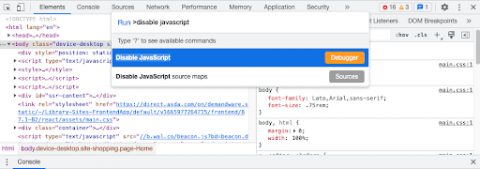

With both 'load more' and infinite scroll, a quick way to understand whether JavaScript could be causing issues with accessing paginated content is to disable JavaScript.

In Chrome, that's Option + Command + I to open dev tools, then Command + Shift + P to run a command, then type "disable javascript".

Have a click around with JavaScript disabled and see if the pagination still works.

If not, there could be some scope for optimisation. In the examples above, Asos still worked fine, whereas George was totally reliant on JS and could not be used without it.
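
The same check can be roughly approximated in code by looking at the raw HTML, before any JavaScript runs, for a real href link to the next page. This is only a sketch: the function name and the `shop.example` URLs are hypothetical, and it is a stand-in for the manual test above, not a model of how Googlebot actually processes a page.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class AnchorCollector(HTMLParser):
    """Collect href values from <a> tags, skipping fragment-only and javascript: links."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href and not href.startswith(("#", "javascript:")):
                self.hrefs.append(href)


def has_crawlable_link(raw_html: str, page_url: str, next_page_url: str) -> bool:
    """True if the raw HTML contains an <a href> that resolves to the next page's URL."""
    parser = AnchorCollector()
    parser.feed(raw_html)
    resolved = {urljoin(page_url, href) for href in parser.hrefs}
    return next_page_url in resolved


page = "https://shop.example/jeans"
next_page = "https://shop.example/jeans/page-2"

with_href = '<a href="/jeans/page-2">Load more</a>'
js_only = '<button onclick="loadMore()">Load more</button><a href="#">Top</a>'

print(has_crawlable_link(with_href, page, next_page))
print(has_crawlable_link(js_only, page, next_page))
```

In practice you would feed it the HTML returned by a plain HTTP request for the category page, which is what the page looks like with JavaScript switched off.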

Conclusion

When handled incorrectly, pagination can limit the visibility of your website's content. Avoid this happening by:

- Building your pagination with crawlable href links that effectively link to the deeper pages

- Ensuring that only the first page in the sequence is optimised, by removing any 'SEO content' from paginated URLs and inserting the page number in title tags

- Remembering that Googlebot doesn't scroll or click, so if a JavaScript-reliant load more or infinite scroll approach is used, ensure it's made search-friendly, with paginated pages still accessible with JavaScript disabled

I hope you found this guide on pagination useful, but if you need any further advice or have any questions, please don't hesitate to reach out to me on LinkedIn or get in touch with a member of our team.
